In nonlinear dynamical systems, a small change in one place can have a huge impact elsewhere. Think of the climate, the human brain, or the electric grid. These systems are constantly evolving and can be extremely difficult to predict. Over the past two decades, however, scientists have made exciting progress in forecasting them using a machine-learning technique called reservoir computing.

Reservoir computing has proven effective at modeling complex chaotic behavior, even with limited training data. It can forecast the trajectories of chaotic systems and, for systems that can settle into one of several final states, predict from the initial conditions alone which state they will reach. This has sparked considerable excitement among researchers, including Yuanzhao Zhang, a Complexity Postdoctoral Fellow at the Santa Fe Institute (SFI).

However, Zhang couldn’t help but be skeptical. Could reservoir computing really live up to its promise? In a new paper published in Physical Review Research, Zhang and physicist Sean Cornelius of Toronto Metropolitan University shed light on some overlooked limitations of reservoir computing. They identify a kind of Catch-22 that can be especially challenging for complex dynamical systems.

Reservoir computing is a neural-network approach to prediction that is simpler and cheaper to train than many other frameworks: the connections inside its network, the “reservoir,” are set at random and left fixed, and only the output layer is fitted to data. The approach has been around for more than 20 years, but a recent variant known as next-generation reservoir computing (NGRC) has made it even more powerful. NGRC requires less training data, needs almost no warm-up time, and has shown promise in modeling dynamical systems.
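
A minimal pure-Python sketch can make “only the output layer is trained” concrete. It is a toy echo-state-style reservoir, not the setup used in the paper, and every specific in it is an illustrative assumption: the helper names (`make_reservoir`, `run_reservoir`, `train_readout`), the 30-node reservoir, the weight scaling, the ridge parameter `lam`, the 50-step warm-up, and the sine-wave task. The random input and recurrent weights are drawn once and never updated; fitting the model is a single ridge-regression solve for the readout weights `w_out`.

```python
import math
import random


def make_reservoir(n, scale=0.9, seed=0):
    """Random input and recurrent weights; in reservoir computing these stay fixed."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    # Every entry has magnitude at most scale / n, so each row's absolute values
    # sum to at most `scale` < 1, which keeps the reservoir dynamics stable.
    w = [[rng.uniform(-1.0, 1.0) * scale / n for _ in range(n)] for _ in range(n)]
    return w_in, w


def run_reservoir(w_in, w, inputs):
    """Drive the reservoir with a scalar input series and record every state."""
    n = len(w_in)
    x = [0.0] * n  # arbitrary starting state
    states = []
    for u in inputs:
        x = [math.tanh(sum(w[i][j] * x[j] for j in range(n)) + w_in[i] * u)
             for i in range(n)]
        states.append(x)
    return states


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def train_readout(features, targets, lam=1e-4):
    """Ridge regression for the output weights: the only trained part of the model."""
    d = len(features[0])
    a = [[sum(f[i] * f[j] for f in features) + (lam if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(f[i] * y for f, y in zip(features, targets)) for i in range(d)]
    return solve(a, b)


# Toy task (an assumption for illustration): one-step-ahead sine-wave prediction.
series = [math.sin(0.2 * t) for t in range(301)]
inputs, targets = series[:-1], series[1:]
w_in, w = make_reservoir(30)
states = run_reservoir(w_in, w, inputs)
warmup = 50  # drop early states that still reflect the arbitrary starting state
feats = [s + [1.0] for s in states[warmup:]]  # reservoir state plus a bias term
ys = targets[warmup:]
w_out = train_readout(feats, ys)
preds = [sum(fi * wi for fi, wi in zip(f, w_out)) for f in feats]
mse = sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)
print(f"in-sample MSE: {mse:.2e}")
```

Since the reservoir itself never changes, training reduces to one small linear solve, which is the source of the method's low training cost. In practice a library routine such as NumPy's linear solver would replace the hand-written `solve`.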

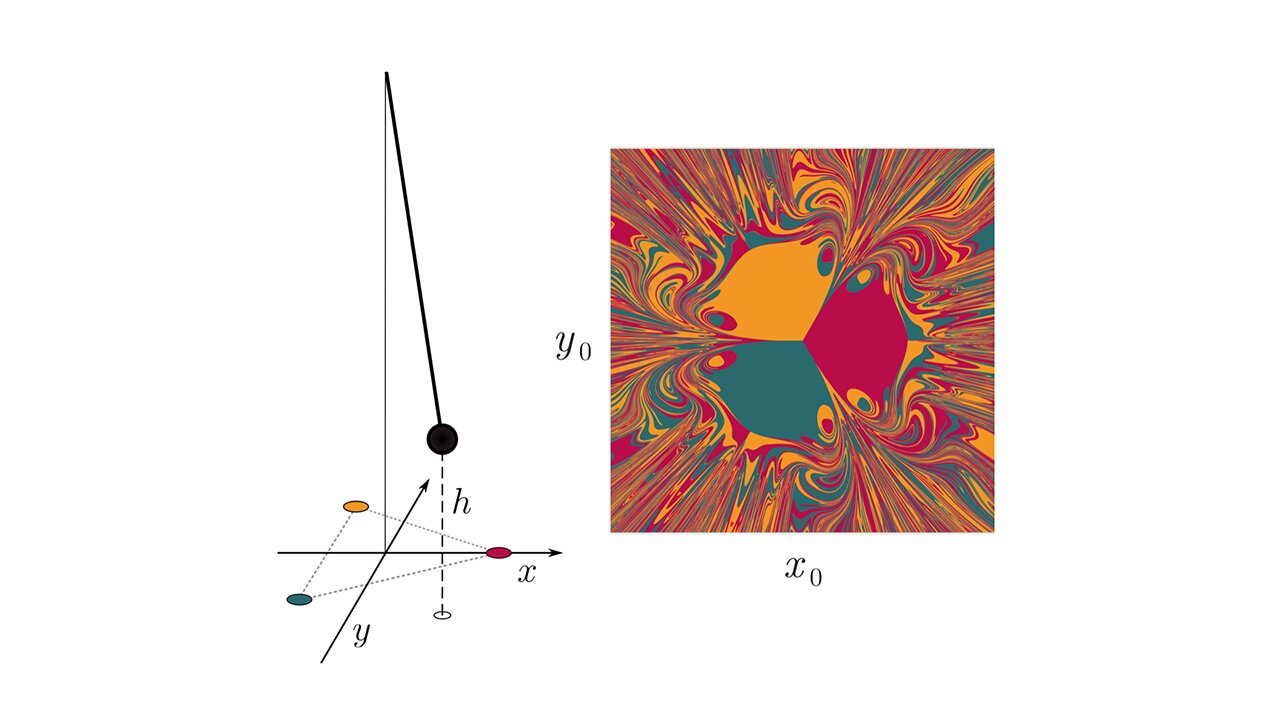

Zhang and Cornelius examined both standard reservoir computing and NGRC and found that each has its limitations. For NGRC, they studied a simple chaotic system: a pendulum with a magnet at its end, swinging among three fixed magnets until it comes to rest over one of them. They found that the model performed well when it was given the exact form of the system’s nonlinearity in advance. However, when that supplied nonlinearity was perturbed even slightly, the model’s predictions became no better than chance.
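
What “information about the system’s nonlinearity” means becomes clearer from how NGRC builds its features. In its usual form there is no random reservoir: the feature vector is a constant, a few time-delayed copies of the observed signal, and nonlinear functions of those delays (often polynomial products), followed by a linear readout. The sketch below is a stand-in, not the magnetic-pendulum model from the study: it uses the Hénon map, whose update `x[t+1] = 1 - 1.4 x[t]^2 + 0.3 x[t-1]` is exactly a linear combination of quadratic features built from two delays. The helper names (`henon_series`, `ngrc_features`, `fit_and_score`), the two-delay choice, and the ridge parameter `lam` are assumptions for illustration.

```python
import itertools


def henon_series(length, a=1.4, b=0.3):
    """Scalar Henon map x[t+1] = 1 - a*x[t]**2 + b*x[t-1], started from x = 0, 1."""
    xs = [0.0, 1.0]
    while len(xs) < length:
        xs.append(1.0 - a * xs[-1] ** 2 + b * xs[-2])
    return xs


def ngrc_features(xs, t, k=2, nonlinear=True):
    """NGRC-style features at time t: a constant, the k most recent values, and
    (optionally) every unique quadratic product of those values."""
    linear = [xs[t - d] for d in range(k)]  # x[t], x[t-1], ..., x[t-k+1]
    feats = [1.0] + linear
    if nonlinear:
        feats += [p * q for p, q in itertools.combinations_with_replacement(linear, 2)]
    return feats


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_and_score(xs, nonlinear, lam=1e-10, start=100):
    """Fit a linear (ridge) readout for one-step prediction; return in-sample MSE."""
    ts = range(start, len(xs) - 1)  # skip the first `start` points as transient
    rows = [ngrc_features(xs, t, nonlinear=nonlinear) for t in ts]
    ys = [xs[t + 1] for t in ts]
    d = len(rows[0])
    a = [[sum(r[i] * r[j] for r in rows) + (lam if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(d)]
    w_out = solve(a, b)
    preds = [sum(ri * wi for ri, wi in zip(r, w_out)) for r in rows]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


xs = henon_series(600)
mse_quadratic = fit_and_score(xs, nonlinear=True)  # includes the map's x**2 term
mse_linear = fit_and_score(xs, nonlinear=False)    # no nonlinear features at all
print(f"with quadratic features: {mse_quadratic:.1e}")
print(f"linear features only:    {mse_linear:.1e}")
```

With the quadratic features supplied, the readout can match the map up to rounding error; without them, no linear readout can. The sketch stops there and does not reproduce the study's sensitivity result, in which a perturbed nonlinearity ruined the predictions.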

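Standard reservoir computing, by contrast, needed a lengthy “warm-up” period, in which the model is fed a stretch of the system’s actual trajectory before it begins predicting. The researchers found that this warm-up had to cover almost the entire transient motion of the magnet before it settled, leaving little for the model to predict.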
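
The warm-up exists because a reservoir’s state depends on whatever arbitrary state it started in until enough real input has washed that dependence out. A toy sketch shows the effect: two copies of the same reservoir start from different random states, receive the same input, and the largest difference between their states is recorded at each step. The weights, sizes, helper names, and sine input are hypothetical, and this reservoir is deliberately scaled so that the gap shrinks by at least a factor of 0.9 per step (each row of recurrent weights has absolute sum at most 0.9, and `tanh` never amplifies differences).

```python
import math
import random


def make_reservoir(n, scale=0.9, seed=1):
    """Hypothetical fixed reservoir; each row of |w| sums to at most `scale`."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    w = [[rng.uniform(-1.0, 1.0) * scale / n for _ in range(n)] for _ in range(n)]
    return w_in, w


def step(w_in, w, x, u):
    """One reservoir update: x <- tanh(W x + w_in * u)."""
    n = len(x)
    return [math.tanh(sum(w[i][j] * x[j] for j in range(n)) + w_in[i] * u)
            for i in range(n)]


n, scale, steps = 30, 0.9, 200
w_in, w = make_reservoir(n, scale)
rng = random.Random(42)
x_a = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # two arbitrary starting states
x_b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
gaps = []
for t in range(steps):
    u = math.sin(0.2 * t)  # both copies receive the same input signal
    x_a, x_b = step(w_in, w, x_a, u), step(w_in, w, x_b, u)
    gaps.append(max(abs(p - q) for p, q in zip(x_a, x_b)))

for t in (0, 9, 49, 199):
    print(f"step {t + 1:3d}: largest state difference {gaps[t]:.1e}")
```

Reservoirs tuned to retain a longer memory of past inputs typically forget their starting state more slowly, so they need correspondingly longer warm-ups.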

Understanding and addressing these limitations could make both standard reservoir computing and NGRC considerably more useful for modeling dynamical systems, helping researchers harness these frameworks to make more accurate predictions from less data.